Welcome, AI Insiders!

Expect new defaults. Microsoft is building its own brains. OpenAI is turning voice into production infrastructure. Meta says study habits can beat sheer size. And Anthropic just made five-year chat retention the default unless you opt out.

📌 In today’s Generative AI Newsletter:

Microsoft launches MAI-Voice-1 and MAI-1-preview for Copilot

OpenAI ships GPT-Realtime with SIP calling and tool use

Meta’s Active Reading boosts recall and releases code and data

Anthropic moves consumer chat retention to five years

🤖 Microsoft AI Debuts Its First In-House Models

Image source: Microsoft

Microsoft just introduced its first homegrown AI systems, marking a turning point in its uneasy partnership with OpenAI. The new models, MAI-Voice-1 and MAI-1-preview, are designed to power Copilot and eventually compete with the likes of GPT-5 and DeepSeek.

Here’s what’s inside the launch:

MAI-Voice-1 generates a full minute of audio in under one second on a single GPU and already powers Copilot Daily's AI news host and podcast-style explainers.

Users can try it in Copilot Labs: type a script, pick a voice, and hear the model perform it in different speaking styles.

MAI-1-preview was trained on roughly 15,000 Nvidia H100s and is being tested on LM Arena. It is tuned for everyday queries and natural instruction-following.

The strategy is consumer first, according to Microsoft AI chief Mustafa Suleyman, who says the company's vast advertising and consumer telemetry data make it possible to build models designed for personal use, not just for the enterprise.

The models mark Microsoft’s move to reduce reliance on OpenAI and diversify the brains behind Copilot. If MAI-1-preview lives up to its billing, the company could start shaping an ecosystem of specialized agents under its own control, a shift with big implications for who actually owns the future of consumer AI.

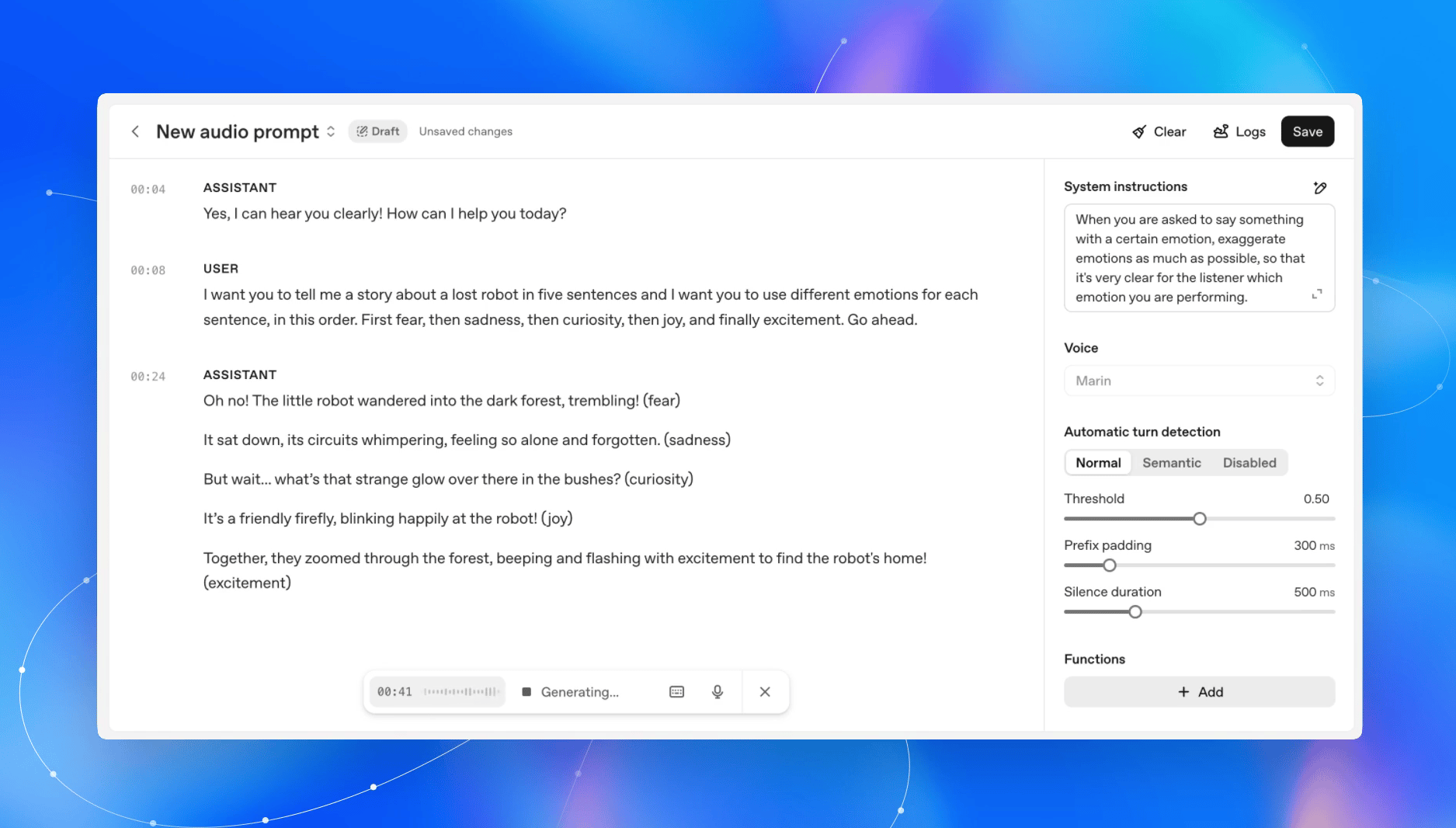

🎙️ OpenAI Launches GPT-Realtime for Production Voice Agents

Image Source: OpenAI

OpenAI has moved its Realtime API into general availability with a new speech-to-speech model and expanded capabilities that bring AI voice agents closer to human-like interaction. The upgrade adds remote MCP server support, image inputs, and SIP phone calling, making it easier for companies to deploy assistants that can hold their own in real-world calls and conversations.

Here’s what’s rolling out:

New voices: Marin and Cedar debut with richer intonation and emotion, while all eight existing voices have been upgraded for more natural flow.

Sharper reasoning: On Big Bench Audio, GPT-Realtime scores 82.8%, up from 65.6% last year, with stronger performance on alphanumerics, multi-step tasks, and language switching.

Instruction discipline: Accuracy on MultiChallenge Audio climbs to 30.5%, meaning the model follows developer prompts with more precision in customer-facing settings.

Smarter tool use: Function-calling accuracy jumps to 66.5%, and asynchronous calls keep conversations fluid while background actions run.

Early adopters like Zillow, T-Mobile, StubHub, Lemonade, and Oscar Health are already weaving GPT-Realtime into their services. For Zillow, it means agents that can narrow listings by lifestyle or guide financing conversations with its BuyAbility score. OpenAI is turning voice from novelty into infrastructure.

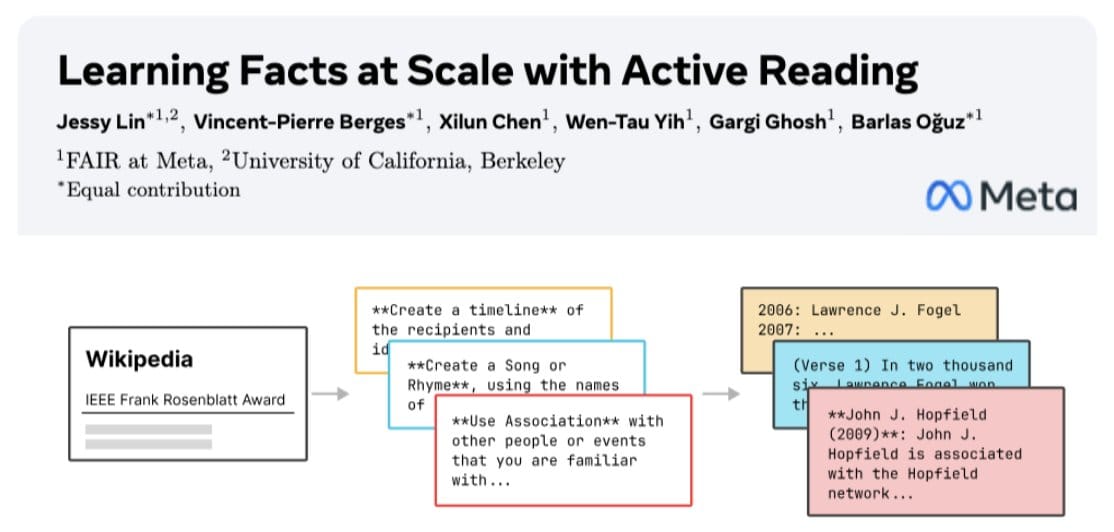

📙 Meta Teaches LLMs to Study Like Humans

Image Source: Meta’s research paper “Learning Facts at Scale with Active Reading”

Meta researchers have introduced Active Reading, a training method that pushes models to learn like humans rather than memorize blindly. Instead of passively ingesting documents, the model designs its own study routines, such as paraphrasing, drawing analogies, writing timelines, and even quizzing itself, to retain knowledge with surprising efficiency.

Here’s what’s new:

Smaller beats bigger: An 8B WikiExpert model trained with Active Reading surpassed DeepSeekV2 (236B) and Llama 3 (405B) on factual recall benchmarks.

Recall surge: On SimpleQA, Active Reading boosted scores by 313% relative to standard fine-tuning.

Scaling that sticks: Unlike synthetic QA data, which quickly plateaus, this method improves as more data is added.

Open to all: Both the WikiExpert model and the 1T-token dataset are being released for the research community.

The implications are clear: smarter training could outweigh brute-force scale. If small, specialized models can match or even outperform giants, the door opens for powerful AI that's cheaper, open-source, and deployable far beyond today's hyperscale labs.

🧾 Anthropic’s Data Grab Stirs User Backlash

Image Credits: Maxwell Zeff

Anthropic is facing mounting criticism after announcing that Claude consumer chats and coding sessions will now be used for training and retained for five years unless users opt out by September 28. The update reverses its previous 30-day deletion policy, and it follows a string of unpopular changes that many users already call "rugpulls."

Here’s what users are pointing to:

Shrinking benefits: Max plan limits were halved six weeks ago without clear communication, and “5x/20x” usage plans reportedly deliver closer to 3x/8x.

Claude Code frustrations: Users complain that Opus 4 is absent from Claude Code, repos tied to it have faced DMCA takedowns, and Windsurf offers no Claude 4 access.

Opaque quotas: Weekly usage caps have been imposed without concrete numbers, leaving users guessing at their limits.

Surprise data retention: Storage extends from 30 days to five years, with the training toggle switched on by default and shown in small print beneath a large "Accept" button.

Anthropic frames the shift as a way to “improve model safety” and boost Claude’s coding, reasoning, and analysis skills. But the real driver is clear: access to millions of real conversations and code snippets could help Anthropic close the gap with rivals like OpenAI and Google.
