Welcome back! The future of intelligence is starting to feel personal. Companies are giving AI missions, morals, and even meaning. Labs are racing to decode the human mind while researchers ask what happens when machines begin to understand us too well. The story of AI is now about identity, and who gets to define it.

In today’s Generative AI Newsletter:

• Microsoft launches a new team to build “Humanist Superintelligence”

• Sam Altman rejects reports of a potential government bailout

• China’s Moonshot AI releases an open model that rivals GPT-5

• Scientists develop “mind-captioning” AI that translates thoughts into text

Latest Developments

Microsoft Launches Superintelligence Team to Build “Humanist AI”

Image Credit: Getty Images

Microsoft AI CEO Mustafa Suleyman has announced the MAI Superintelligence Team, a new division focused on developing AI systems that outperform humans in specific domains such as medicine and energy. The project aims to build what Suleyman calls "Humanist Superintelligence": AI designed to serve human goals rather than to pursue open-ended general intelligence.

What’s Microsoft’s Plan?

Focused Mission: The team will develop specialized AI systems for healthcare, education, and clean energy, prioritizing real-world challenges over abstract research.

First Goal in Medicine: Within two to three years, Microsoft aims to build medical superintelligence capable of earlier diagnosis and improved treatment outcomes.

Leadership and Talent: Suleyman named Karen Simonyan chief scientist and is drawing researchers from DeepMind, OpenAI, and Anthropic to lead the work.

Strategic Independence: The launch follows Microsoft's new arrangement with OpenAI, which gives both companies the freedom to pursue superintelligence research independently.

Suleyman's announcement gives Microsoft's AI vision a distinct identity for the first time, one rooted in pragmatism and ethics rather than pure ambition. By framing "superintelligence" as a tool for human advancement instead of an abstract frontier, Microsoft is positioning itself to lead the next phase of AI.


Sam Altman Denies That OpenAI Is Seeking a Government Bailout

Image Credit: Kevin Mazur/Getty Images for TIME and Brian Lawless - Pool/Getty Images

OpenAI CEO Sam Altman said Thursday that the company does not want or need a government safety net for its massive $1.4 trillion data center buildout. He rejected the idea of federal loan guarantees after comments from OpenAI CFO Sarah Friar sparked public backlash. Friar had suggested the U.S. government could “backstop” OpenAI’s infrastructure loans to make financing cheaper, before walking back her remarks.

Here’s what happened:

The Comments: Friar floated the idea of government-backed loans to lower the cost of OpenAI’s hardware expansion, calling AI a “national strategic asset.”

The Clarification: After criticism online, Friar said OpenAI was not seeking a government guarantee and admitted her word choice had “muddied the point.”

Altman’s Response: Altman reaffirmed that OpenAI will not seek bailouts, saying governments should not “pick winners or losers” or rescue companies that mismanage funds.

Industry Context: Trump's AI advisor David Sacks also dismissed the notion. He said the U.S. will not bail out AI firms and will instead focus on streamlining energy and permitting for the sector.

Altman's statement underscores OpenAI's desire to appear self-reliant amid ballooning infrastructure costs and rising political scrutiny. Still, with trillion-dollar ambitions and fierce competition, OpenAI's confidence rests on its ability to grow revenue faster than its expenses, a challenge even the most fluent AI cannot negotiate away.

A New Open-Source Model Brings China to the Frontier, Beating GPT-5 and Claude 4.5

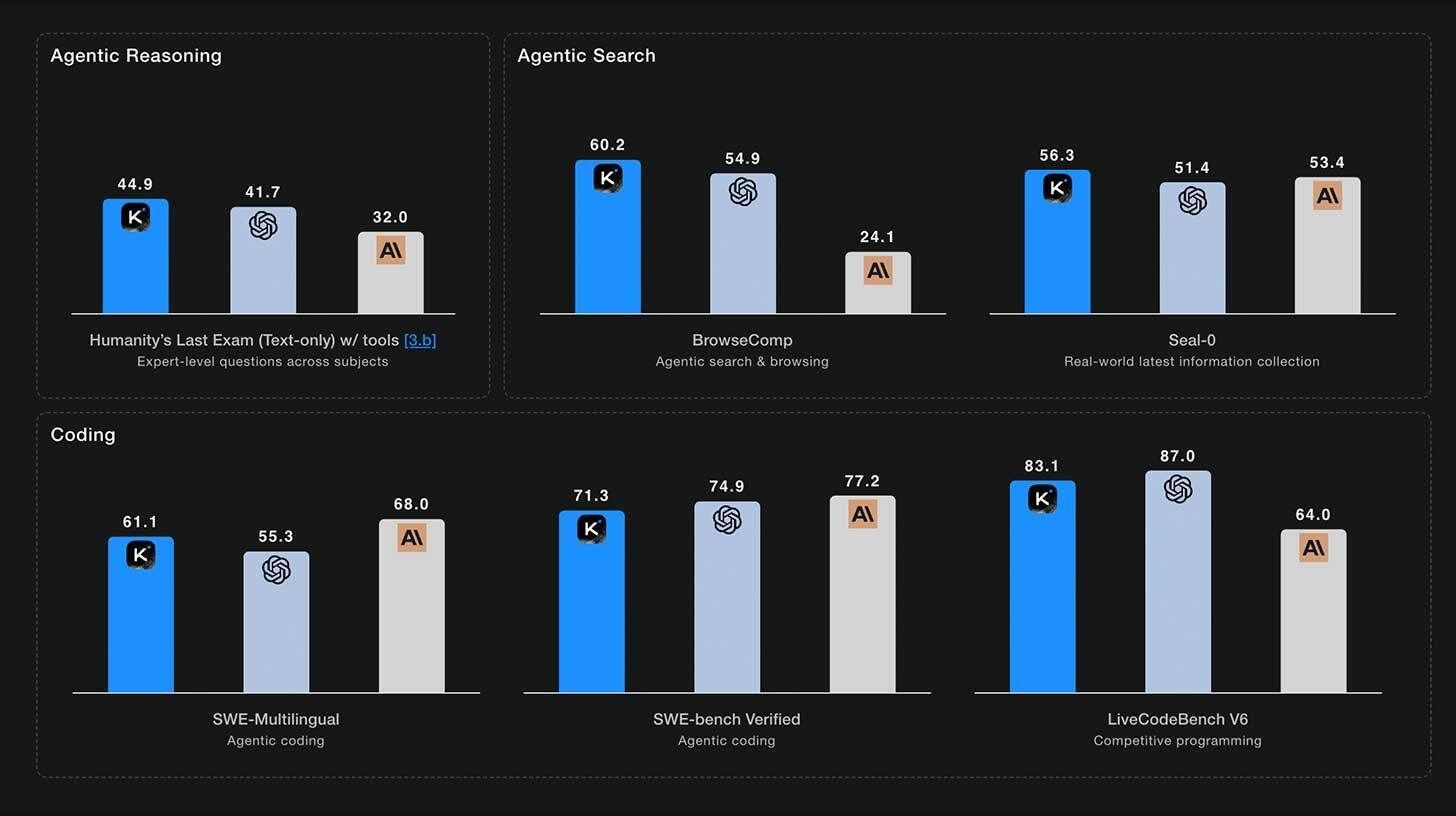

Image Source: moonshotai

Alibaba-backed Moonshot AI has released Kimi K2 Thinking, an open-source reasoning model that matches or surpasses GPT-5 and Claude Sonnet 4.5 on several benchmarks, while reportedly costing a fraction as much to train. The model scored 44.9% on Humanity's Last Exam, setting a new record and outperforming major frontier systems on agentic and reasoning tasks.

Here’s what stands out:

Frontier-Level Reasoning: K2 Thinking outperformed GPT-5 and Claude Sonnet 4.5 on several multi-step reasoning and planning benchmarks.

Creative and Technical Strength: It showed major gains in coding and creative writing, and it can chain up to 300 sequential tool calls.

Ultra-Low Cost: The model reportedly cost under $5 million to train, far below the hundreds of millions spent on top Western systems.

Open-Source Release: K2 Thinking's weights are publicly released. This marks a rare moment in which a Chinese lab rivals the global frontier in both quality and accessibility.

The launch lands just days after Nvidia's Jensen Huang said China was only "nanoseconds" behind in AI progress. With Moonshot's new model, that gap may have narrowed to nearly zero. If its benchmark results hold up under independent testing, K2 Thinking could show how powerful and affordable open models can be.

‘Mind-Captioning’ AI Turns Brain Activity Into Text

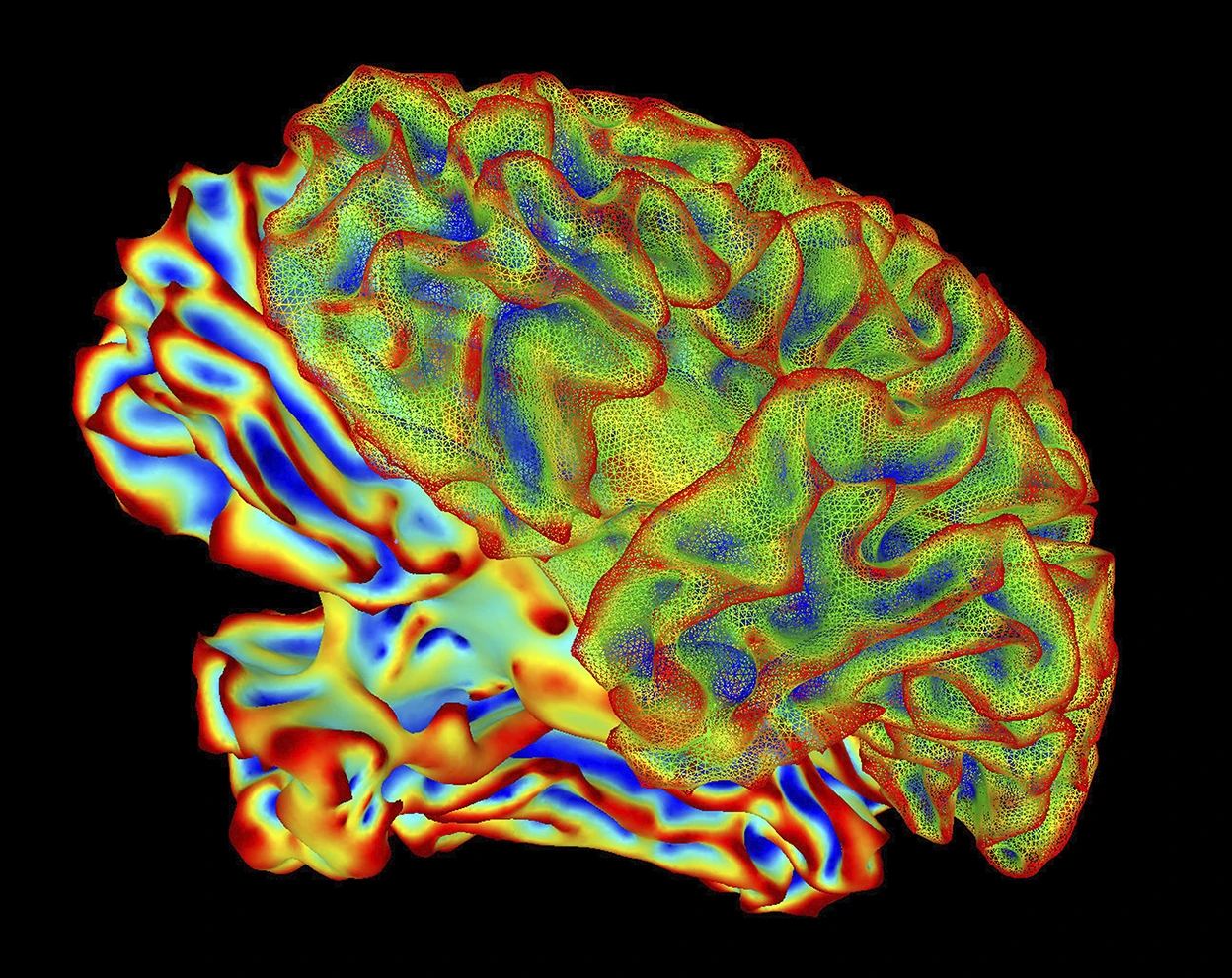

Image Credit: Nature

Scientists have developed a new "mind-captioning" technique that translates brain activity into descriptive sentences about what a person is seeing or picturing. Researchers at Japan's NTT Communication Science Laboratories used non-invasive fMRI scans to train AI models that match brain signals with "meaning signatures." These are numerical patterns that represent the semantic content of thousands of short videos.

Here’s how it works:

Scanning the Mind: Participants watched over 2,000 videos while researchers recorded their brain activity using functional MRI.

Mapping Meaning: A language model converted each video's caption into a meaning signature, a set of numbers capturing the scene's subjects, actions, and context.

AI Decoding: The system learned to link each brain pattern to its matching meaning signature, allowing it to predict what someone was picturing or viewing.

Sentence Generation: A text generator then produced full sentences whose meaning closely matched the decoded signatures.
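To make the decoding step more concrete, here is a toy sketch in Python. It is not the NTT team's method or code. It assumes three-number "meaning signatures," simulates eight fMRI "voxels" as a noisy linear response to each signature, and fits a ridge-regression decoder that maps voxels back to signatures. This is a common kind of fMRI decoder and is assumed here for illustration. The sketch then picks the closest caption from a fixed list using cosine similarity. The real system generates new sentences rather than choosing from a list, so this retrieval step is a deliberate simplification. All captions, sizes, and helper names (`simulate_brain`, `ridge_fit`, `decode`) are hypothetical.

```python
import math
import random

# Hypothetical toy sizes: real studies use far longer meaning signatures
# and thousands of fMRI voxels. Nothing here comes from the NTT study.
N_FEATURES, N_VOXELS, N_TRAIN, NOISE = 3, 8, 100, 0.05


def matvec(x, W):
    """Row vector x (length d) times matrix W (d x k) -> length-k vector."""
    return [sum(x[i] * W[i][k] for i in range(len(x))) for k in range(len(W[0]))]


def solve(A, B):
    """Solve A @ W = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [A[i][:] + B[i][:] for i in range(n)]  # augmented matrix [A | B]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [row[n:] for row in M]


def ridge_fit(X, Y, lam=0.1):
    """Ridge regression: solve (X^T X + lam*I) W = X^T Y for decoder weights W."""
    d = len(X[0])
    A = [[sum(x[i] * x[j] for x in X) + (lam if i == j else 0.0) for j in range(d)]
         for i in range(d)]
    B = [[sum(x[i] * y[k] for x, y in zip(X, Y)) for k in range(len(Y[0]))]
         for i in range(d)]
    return solve(A, B)


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


rng = random.Random(0)

# Simulated "brain" (an assumption): each voxel responds linearly to the signature.
E = [[rng.gauss(0, 1) for _ in range(N_VOXELS)] for _ in range(N_FEATURES)]


def simulate_brain(signature):
    """Toy voxel activity for a signature, plus Gaussian measurement noise."""
    return [v + rng.gauss(0, NOISE) for v in matvec(signature, E)]


# Training: known signatures for watched "videos" and the activity they evoke.
train_sigs = [[rng.gauss(0, 1) for _ in range(N_FEATURES)] for _ in range(N_TRAIN)]
train_brain = [simulate_brain(s) for s in train_sigs]
W = ridge_fit(train_brain, train_sigs)  # decoder: voxels -> meaning signature

# Hypothetical captions with made-up signatures.
captions = {
    "a dog runs on the beach": [1.0, 0.0, 0.0],
    "a person plays the piano": [0.0, 1.0, 0.0],
    "cars drive through the rain": [0.0, 0.0, 1.0],
}


def decode(brain):
    """Predict a signature from brain activity, then return the closest caption."""
    predicted = matvec(brain, W)
    return max(captions, key=lambda c: cosine(predicted, captions[c]))


print(decode(simulate_brain(captions["a person plays the piano"])))
```

The ridge penalty `lam` keeps the decoder stable when voxels carry overlapping signals. To move closer to the sentence-generation step, you would replace the fixed caption list with a generator that searches for text whose signature best matches `predicted`.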

The breakthrough shows that brain imaging can now capture not just what people see, but how they interpret it. Researchers say this could help restore communication for people who lose the ability to speak after neurological injuries. The findings also raise new questions about mental privacy and the boundary between perception and language.

🚀 Boost your business with us. Advertise where 13M+ AI leaders engage!

🌟 Sign up for the first (and largest) AI Hub in the world.

📲 Follow us on social media.