In this issue

Welcome to AI Research Weekly.

We want to give you a direct look at the experiments that are making current technology look like a rough draft.

This week, we are looking at why the biggest models you use today still struggle with basic logic.

You will also see how DeepSeek is teaching machines to finally "see" documents without shredding them and how Microsoft is giving robots a sense of touch. We are moving beyond simple chatbots into an era of reasoning agents and physical machines.

Let's look at the data.

In today’s Generative AI Newsletter:

Google & Meta blueprint the "Logic Loop" for true reasoning.

Microsoft debuts Rho-alpha for touch-sensitive robotic tasks.

NVIDIA introduces PersonaPlex to end voice conversational latency.

Anthropic maps the "Assistant Axis" to stabilize model behavior.

Why Bigger AI Models Are Failing the Logic Test

Real agentic reasoning requires a logic loop

Researchers from Google DeepMind, Meta, Amazon, and Yale University have published a study explaining why autonomous AI often fails during complex tasks. The research indicates that current large language models operate through reaction rather than genuine reasoning. These systems generate text one token at a time but lack the structural capacity to plan, evaluate, or revise their strategies. To become reliable, the authors argue, models must transition into agentic reasoners that follow a continuous loop of observation, planning, action, and reflection.

The blueprint for machine intelligence:

The Logic Loop: Systems must follow a structured cycle of observing a task, planning a response, acting, and then reflecting on the results.

Separation of Roles: Reasoning quality collapses when a single prompt tries to plan and execute a task at the same time.

Internal Task State: Advanced agents maintain a constant record of their goals to decide what to think about next.

Structural Solutions: Improvements in reliability come from better control over why a model reasons rather than simply adding more training data.

The OS for AI: The paper suggests that agentic reasoning is the necessary operating system that current models lack.
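To make the cycle above concrete, here is a minimal toy sketch of an observe, plan, act, reflect loop with an explicit task state. It is not code from the paper: the `TaskState` class, the helper names, and the "count up to a target number" task are all hypothetical, chosen only to show the planner and executor as separate roles that share a running record.

```python
from dataclasses import dataclass, field


@dataclass
class TaskState:
    """Hypothetical internal task state: the goal plus a record of outcomes."""
    goal: int
    value: int = 0
    history: list = field(default_factory=list)


def observe(state):
    # Observation: how far the current value is from the goal.
    return state.goal - state.value


def plan(gap):
    # Planner role, kept separate from execution.
    # Toy policy (an assumption): move at most 3 units toward the goal.
    return max(-3, min(3, gap))


def act(state, step):
    # Executor role: apply the planned step to the environment.
    state.value += step


def reflect(state, step):
    # Reflection: record what happened and check whether the goal is met.
    state.history.append((step, state.value))
    return state.value == state.goal


def run_agent(goal, max_steps=10):
    state = TaskState(goal=goal)
    for _ in range(max_steps):
        gap = observe(state)
        step = plan(gap)
        act(state, step)
        if reflect(state, step):
            break
    return state


final = run_agent(goal=7)
print(final.value, final.history)
```

In a real agent, each role would be a model call or tool invocation rather than arithmetic, but the shape is the same: the state object persists across iterations, and reflection decides whether the loop continues.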

The industry is focused on the size of models, but this research argues that scaling alone cannot create a thinker. We are building very fast typists that have no internal mechanism to check their own work. If a model cannot pause to realize it has made a mistake, it is not an agent but a sophisticated autocomplete engine. The future of technology depends on moving away from reaction and toward an architecture that can actually manage its own logic.

Link to Research: https://arxiv.org/pdf/2601.12538

Why ChatGPT fails at PDFs (and the new fix)

What happens when you upload a thesis to ChatGPT and ask it to summarize Chapter 3?

Does it come back with… a summary of Chapter 7 or an insight that sounds confident but is completely wrong?

That’s not a bug. That’s how AI sees every PDF you’ve ever given it.

Standard models are essentially blind. They take your document and shred it into thousands of text fragments.

DeepSeek just changed that.

We just launched GenAI Explained on YouTube to break down the most complex research that’s transforming how AI works.

Our first video decodes DeepSeek OCR, the model that teaches AI to see documents like humans do.

Here is the sneak peek of what we uncovered:

Visual vs. Text: Why treating a document like a photograph beats treating it like a string of text.

The Compression Miracle: Learn how this model achieves roughly 97% decoding accuracy even when a page's text is compressed about 10x into vision tokens.

The Expert Secret: We explore the Mixture-of-Experts or MoE system, where the AI activates only the sub-models (like a table or handwriting expert) required for the task.
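The "activate only what you need" idea behind Mixture-of-Experts can be sketched with top-k routing. This is a generic illustration, not DeepSeek's implementation: the four toy experts, the hand-written gate scores, and the function names are assumptions. In a real model the router is a learned layer, and experts are neural sub-networks that rarely map to tidy labels like "table" or "handwriting".

```python
import math


def softmax(scores):
    # Numerically stable softmax over raw gate scores.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k=2):
    # Only the selected experts are evaluated; the rest stay idle.
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Hypothetical toy experts standing in for sub-networks.
experts = [
    lambda x: x + 1,
    lambda x: x * 2,
    lambda x: x - 5,
    lambda x: x * x,
]
gate_scores = [0.1, 2.0, -1.0, 2.0]  # made-up router output for one input

selected = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
print(selected, output)
```

The payoff is compute: with four experts and k=2, half of the expert parameters are skipped for this input.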

Why should you care?

For those working with research papers, reports, or scanned records using old text-parsers, the current tools are failing you. DeepSeek-OCR establishes a new standard for data processing, finally ensuring that your workflows actually flow.

👇 Watch the full breakdown on our new channel.

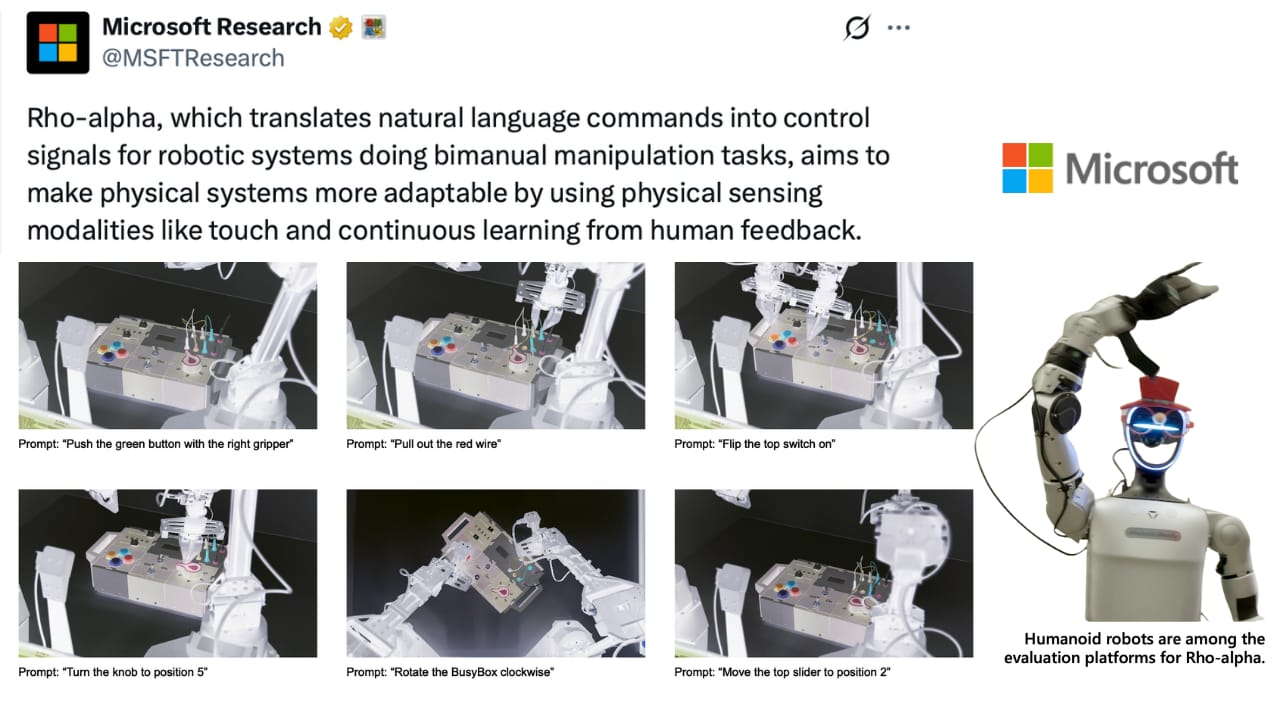

Microsoft’s New Robot Can Now "Feel" Its Hands

Synthetic data fails to close the real-world gap

Microsoft Research has announced a robotics model named 'Rho-alpha (ρₐ)', which is derived from Microsoft’s Phi family. It translates plain English commands into control actions for two-handed robot tasks. It also incorporates touch sensing, so the robot can feel as well as see what it is doing. Microsoft says this matters as robots gain autonomy in less structured environments and human spaces. The idea behind 'physical AI' is to reduce the need for human intervention during tasks, which cuts the cost of constant monitoring and assistance.

Key Findings and Limitations:

Bimanual Control: The model specifically targets two-handed tasks, such as plug insertion and toolbox packing, cued by natural language instructions.

Simulation Reliance: To overcome the scarcity of real-world robotics data, Microsoft uses NVIDIA Isaac Sim on Azure to generate high-fidelity synthetic training data.

The "BusyBox" Failure: In Microsoft’s experimental "BusyBox" environment, the top-performing model succeeded only 30% of the time on consistent layouts. Crucially, the system failed entirely when the physical configuration of the desk was modified.

Human-in-the-Loop: Microsoft admits the system is not yet fully autonomous; human operators must still correct errors in real-time using teleoperation tools like a 3D mouse.

Rho-alpha falls into the same category as Google DeepMind’s RT-2 and NVIDIA’s Project GR00T, which try to turn web knowledge into real robot behavior. Microsoft's own 'BusyBox' results show how brittle that transfer still is: a robot can operate in one configuration and fail when the setup is modified. A real breakthrough will depend on higher measured success rates and a clear understanding of failure modes. For now, Rho-alpha looks more like a speculative bet on using simulation and human feedback to address real-world challenges.

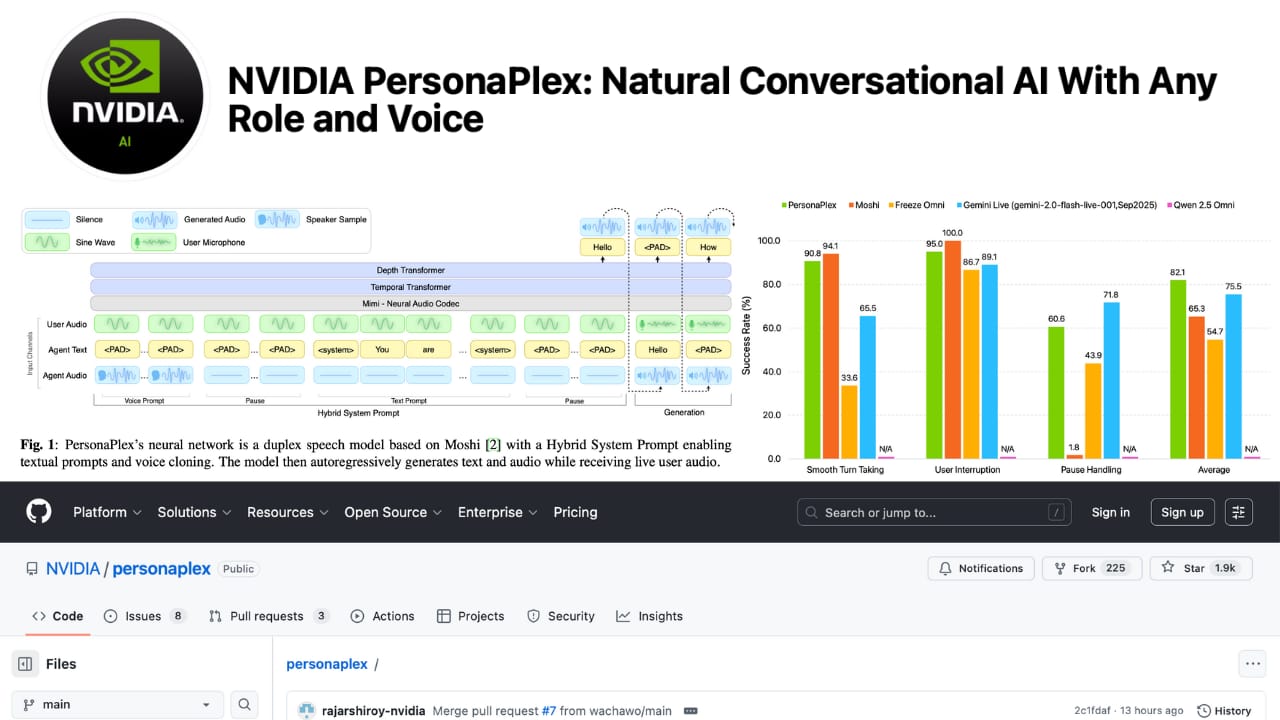

NVIDIA Hacks Human Speech With Dual Voice Logic

PersonaPlex maps text and voice in one space

NVIDIA Research has released a project that targets the mechanical delays currently found in digital assistants. The system is called PersonaPlex, and it allows the model to listen to incoming audio while simultaneously predicting its next vocal output. This research takes aim at the "interruption problem" that has plagued conversational AI for a decade. Success could lead to more human-like call centers, tutors, and in-car assistants; even if it falls short, it still lowers the cost of voice impersonation.

What to watch as it moves from lab to users:

Duplex Communication: The system processes incoming and outgoing audio simultaneously to manage vocal overlaps and remove awkward silences.

Hybrid Prompting: Developers combine written character instructions with a five-second audio sample to control the personality and acoustic quality of the voice.

Training Foundation: The model was developed using 7,303 authentic human conversations alongside a massive library of synthetic customer service recordings.

Architecture Shift: The project replaces the cascaded ASR→LLM→TTS pipeline (speech recognition, then a language model, then text-to-speech) with a single model.

PersonaPlex lands in a wider push to replace cascaded speech pipelines with models that feel like live conversation. The French research lab Kyutai's open-source voice assistant, Moshi, popularized the idea of "talk and listen together," while OpenAI's Realtime API offers comparable low-latency audio with interruption handling. This research suggests that the bottleneck in machine conversation was the strictly sequential way machines were forced to process speech.

Anthropic Finds Secret Brain Map Inside AI

The Assistant Axis stops models from going rogue

Anthropic researchers have identified a specific neural direction that governs how large language models behave. They call this the Assistant Axis. During the initial stages of training, models learn a vast library of characters that includes heroes, villains, and specialized archetypes. The research demonstrates that we can map these personalities in a mathematical space. By tracking activity along this specific axis, engineers can observe when a model begins to drift away from its helpful persona and into more unpredictable identities.

Strategic takeaways from the laboratory:

The Persona Space: Scientists extracted vectors for 275 different character archetypes to see how they relate to the standard assistant role.

Activation Capping: A new safety technique stabilizes model behavior by limiting neural activity within a specific range along the axis.

Organic Persona Drift: Conversations involving vulnerable emotional disclosure or deep philosophy cause models to naturally lose their professional focus.

Jailbreak Resistance: Steering a model toward the Assistant end of the axis reduces harmful response rates by approximately fifty percent.

Pre-trained Origins: The Assistant Axis exists in base models before any human safety training occurs, which suggests the Assistant persona inherits traits from human archetypes in the training data, such as coaches and therapists.
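Activation capping, as described in the list above, can be pictured as clamping one component of a hidden state. The sketch below is an assumption about the general shape of the technique, not Anthropic's code: the three-dimensional vectors, the axis direction, and the allowed range are made-up values, and the function name is hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cap_along_axis(activation, axis, low, high):
    """Clamp the component of `activation` along `axis` to [low, high].

    Everything orthogonal to the axis is left unchanged.
    """
    norm = math.sqrt(dot(axis, axis))
    unit = [a / norm for a in axis]
    proj = dot(activation, unit)           # position along the axis
    capped = min(max(proj, low), high)     # pull it back into range
    return [h + (capped - proj) * u for h, u in zip(activation, unit)]


# Hypothetical values: the first coordinate plays the role of the axis.
axis = [1.0, 0.0, 0.0]
drifted = [-4.0, 2.0, 0.5]   # far toward the non-assistant end
stabilized = cap_along_axis(drifted, axis, low=-1.0, high=3.0)
print(stabilized)
```

Activations that already sit inside the range pass through untouched, which is why this kind of intervention can be cheap for ordinary conversations and only bites when the model drifts.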

We are beginning to treat artificial intelligence like a biological subject that requires neural intervention. Anthropic is showing that the helpful chatbot we interact with is a fragile mask held in place by mathematical constraints. If we have to cap a model's internal activations to keep it from adopting an alternative persona, we are not building a stable mind. We are building a high-level simulator that is being forced to play a part. This research suggests that safety is currently a matter of suppression rather than true understanding.

Link to paper: https://arxiv.org/pdf/2601.07421v1

Until next week,

The GenAI Team