Special highlights from our network

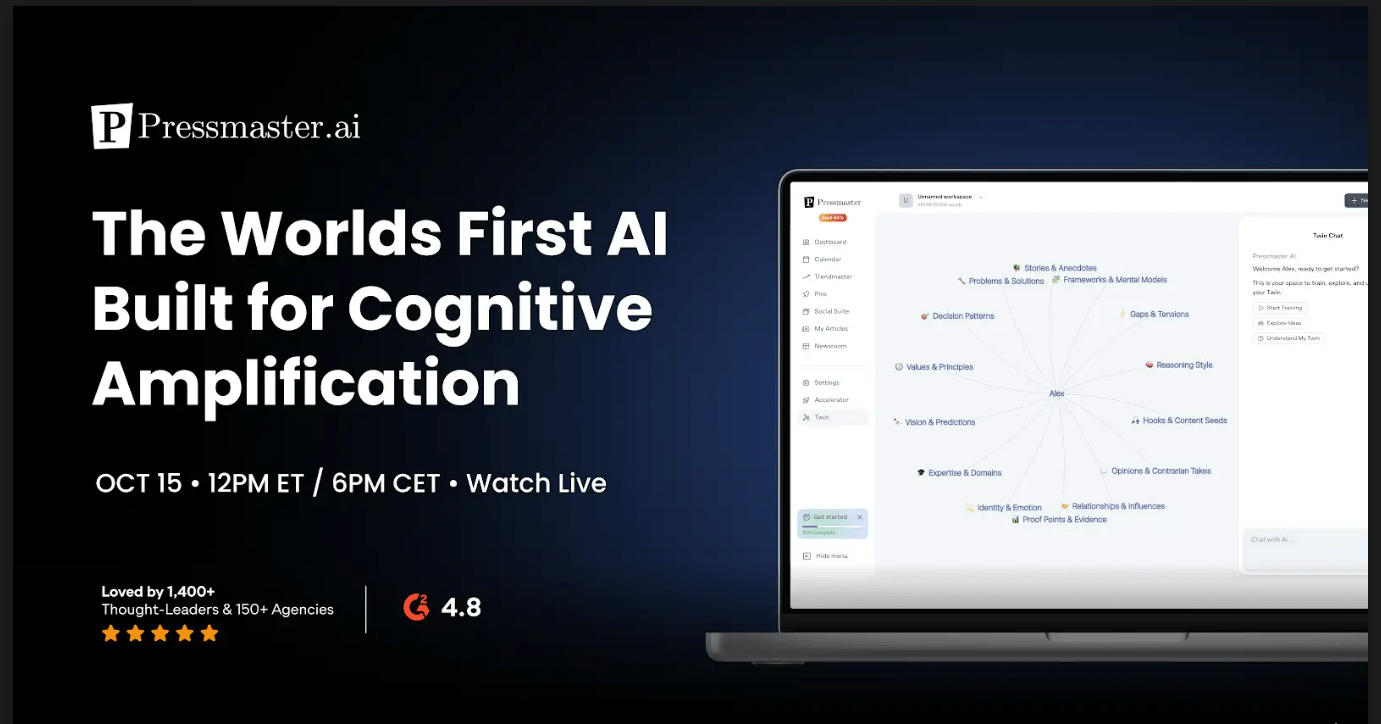

The World's First AI Built for Cognitive Amplification

Every day, AI-generated content gets louder while original thinking fades.

Pressmaster.ai flips that.

It learns how you think, speaks in your voice, and amplifies your perspective, rather than replacing it.

See how one short conversation becomes a month of strategic, high-context content. Created live, in real time, with you.

Experience the future of thought.

📅 October 15 at 12 PM ET (6 PM CET)

Join a live session.

OpenAI’s Sora 2 Is Now Built Into HeyGen

Image Source: HeyGen

HeyGen has integrated OpenAI’s Sora 2 model directly into its platform, giving users the ability to generate cinematic B-roll, scenes, and multi-shot visuals from a single prompt. The move makes HeyGen one of the first public tools to embed Sora’s video and audio generation stack into a creator workflow.

What changes for creators and teams?

Native access: Sora 2 now runs inside HeyGen without exports or third-party tools.

Expanded range: Users can produce realistic, anime-style, and cinematic footage with synchronized sound.

Early testing: The model powers HeyGen’s new desktop app for advanced video editing.

Broader impact: Video creation is moving toward model-native platforms instead of plug-ins and wrappers.

When AI video lives inside production tools, it changes how people experiment, edit, and think about ownership. The open question now is how far integrated models like Sora 2 can go before “filmmaking” becomes something closer to writing code for light.

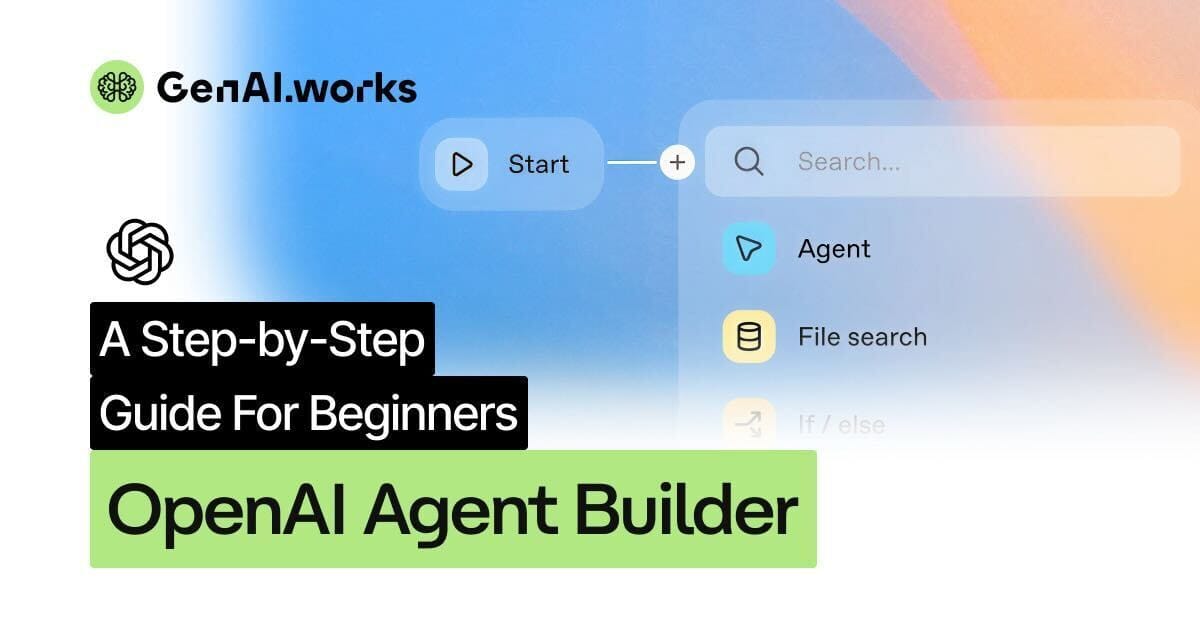

Tutorial: Build Your First AI Agent with Agent Builder

Learn how to use Agent Builder, OpenAI’s new visual canvas for creating AI agents. It sits inside AgentKit, supports MCP servers, and lets you design workflows through a drag-and-drop interface, all without writing code.

Step-by-step:

• Go to platform.openai.com/agent-builder and log in

• Choose a blank flow under the Workflows tab

• Add a Start node and define your input (for example, a student’s question)

• Insert an Agent node to rewrite the question for clarity

• Use Set state to store it for the next step

• Add another Agent node to classify the question type

• Insert a Condition node to route queries to the right sub-agent

• Create two sub-agents: one for Q&A, another for Fact Finding

• Connect the workflow and test it in Preview mode

• Export your agent in TypeScript or Python for customisation
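For readers who want to see the shape of this flow before opening the canvas, here is a minimal sketch in plain Python. It is not the code Agent Builder exports and it does not call any OpenAI API: every name in it (`WorkflowState`, `rewrite_question`, `classify_question`, `run_workflow`) is hypothetical, and the two "agents" are simple stand-ins that use string handling instead of a model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class WorkflowState:
    """Hypothetical stand-in for the Set state step: values shared between nodes."""
    values: Dict[str, str] = field(default_factory=dict)


def rewrite_question(raw: str) -> str:
    # Stand-in for the first Agent node. A real agent would call a model;
    # here we only collapse whitespace and make sure the text ends with "?".
    cleaned = " ".join(raw.split())
    return cleaned if cleaned.endswith("?") else cleaned + "?"


def classify_question(question: str) -> str:
    # Stand-in for the classifier Agent node, using a keyword heuristic
    # (an assumption made purely for illustration).
    fact_cues = ("when", "who", "where", "how many")
    return "fact_finding" if question.lower().startswith(fact_cues) else "qa"


def qa_agent(question: str) -> str:
    # Placeholder sub-agent for general Q&A.
    return f"[Q&A] {question}"


def fact_finding_agent(question: str) -> str:
    # Placeholder sub-agent for Fact Finding.
    return f"[Fact Finding] {question}"


# The Condition node, expressed as a lookup from category to sub-agent.
SUB_AGENTS: Dict[str, Callable[[str], str]] = {
    "qa": qa_agent,
    "fact_finding": fact_finding_agent,
}


def run_workflow(raw_question: str) -> str:
    state = WorkflowState()
    # Start node input -> rewrite Agent -> Set state
    state.values["question"] = rewrite_question(raw_question)
    # Classifier Agent -> Set state
    state.values["category"] = classify_question(state.values["question"])
    # Condition node routes to the matching sub-agent
    handler = SUB_AGENTS[state.values["category"]]
    return handler(state.values["question"])


if __name__ == "__main__":
    print(run_workflow("  when   did the Berlin Wall fall"))
    print(run_workflow("explain photosynthesis"))
```

In the real tool, each stand-in function would be an Agent node backed by a model, and the dictionary lookup would be a Condition node on the canvas.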

Before You Go Live:

Try building small agents first. Once your workflow runs smoothly, integrate it with ChatKit to make it interactive.

Check out our detailed guide!

Head of Instagram Pushes Back on MrBeast’s AI Fears

Image Credit: Bloomberg

Adam Mosseri, the head of Instagram, says AI won’t erase creators but will expand their ranks. Speaking at a media conference after MrBeast warned that AI-generated videos could “kill” the creator economy, he argued that new tools will open the gates to more people, not close them.

What did Mosseri argue?

New creators: AI will help people who couldn’t afford tools or training produce high-quality content.

Real concerns: Bad actors will still use it for “nefarious purposes,” and kids must learn not to believe every video they see.

Blurred lines: Much of today’s content already mixes real and AI-aided elements like filters or color correction.

Platform limits: Meta’s early attempt to label AI content backfired when it misclassified real posts as synthetic.

Mosseri says the responsibility can’t fall on platforms alone. “My kids need to understand that just because they see a video doesn’t mean it happened,” he said. The next generation will grow up in a world where every image is both a story and a possibility, and learning to tell the difference may become the first rule of media literacy.

Figma Partners With Google to Bring Gemini AI Into Design Workflows

Image Credit: Figma

Figma is teaming up with Google to integrate the Gemini 2.5 Flash, Gemini 2.0, and Imagen 4 models into its design platform. The move brings generative AI directly into Figma’s editing and image-generation tools for its 13 million users, aiming to make creative work faster and more iterative.

What changes for designers?

AI integration: Gemini models will power Figma’s “Make Image” feature and enable prompt-based image edits.

Performance gains: Early tests with Gemini 2.5 Flash showed a 50% drop in latency for image generation.

Creative flexibility: Users can request revisions through text, producing design variations instantly.

Industry trend: Major AI labs are racing to embed their models in apps with established user bases.

The partnership marks another step in the convergence of design software and AI infrastructure. Figma’s tools are shifting from static canvases to adaptive workspaces, where collaboration happens not just between people but also between people and models that learn their style.
