Welcome, AI Pioneers!

What would you do if your thoughts could control a screen? For Audrey Crews, that question is no longer hypothetical. Neuralink has crossed from theory into real, lived impact.

Meanwhile, Meta says you’ll fall behind without AI on your face, Google is pushing employees to skill up fast, and NASA is testing robot copilots for deep space.

📌 In today’s Generative AI Newsletter:

Neuralink enables a mind-controlled breakthrough

Meta frames AI glasses as the next major divide

Google mandates AI fluency across teams

NASA deploys an AI assistant aboard the ISS

🧠 Woman Implanted with Neuralink Device Writes Her Name After 20 Years

Image Credit: Audrey Crews

Audrey Crews, paralyzed since age 16, now controls a computer using only her mind. Neuralink’s latest brain-computer interface lets her type and draw without moving a muscle. Implanted with the company’s N1 chip, she used thought alone to write her name for the first time in two decades.

What the implant enables:

Brain signals are captured in real time and translated into digital commands by AI algorithms

Cursor movement, typing, and drawing are now possible using intention alone

Crews shares her creations online, responding to followers with doodles made through pure thought

The device is quarter-sized, wireless, and designed for daily computer control

The procedure was performed at the University of Miami using 128 electrode threads embedded in her motor cortex. Crews is no longer locked in by her body. She is creating again, shaping the screen with nothing but intention. The technology may be early, but its meaning is immediate.

🕶️ Zuckerberg Says AI Glasses Will Define the Next Cognitive Divide

Image Credit: Meta

On Meta’s Q2 earnings call, Mark Zuckerberg doubled down on a vision he outlined in his “superintelligence” blog post. He told investors that people without wearable AI interfaces will face a “significant cognitive disadvantage” in the future. The remarks come as Meta’s smart glasses gain traction and Reality Labs continues burning billions.

What Zuckerberg laid out:

Glasses are Meta’s chosen interface, enabling AI to see and hear through the user’s perspective all day

AI displays unlock new value, from wide-field holograms to low-profile overlays in everyday frames

Ray-Ban Meta sales have tripled, validating wearables as a viable product line amid heavy AR/VR losses

Reality Labs has lost $70B since 2020, with Zuckerberg now reframing it as R&D for AI-native computing

Zuckerberg thinks of eyewear as the interface that separates the augmented from the obsolete, but it’s Meta’s hardware strategy that stands to benefit most. The vision may be futuristic, but the incentives are old. If this is the next computing shift, it’s one Meta wants to manufacture, brand, and sell.

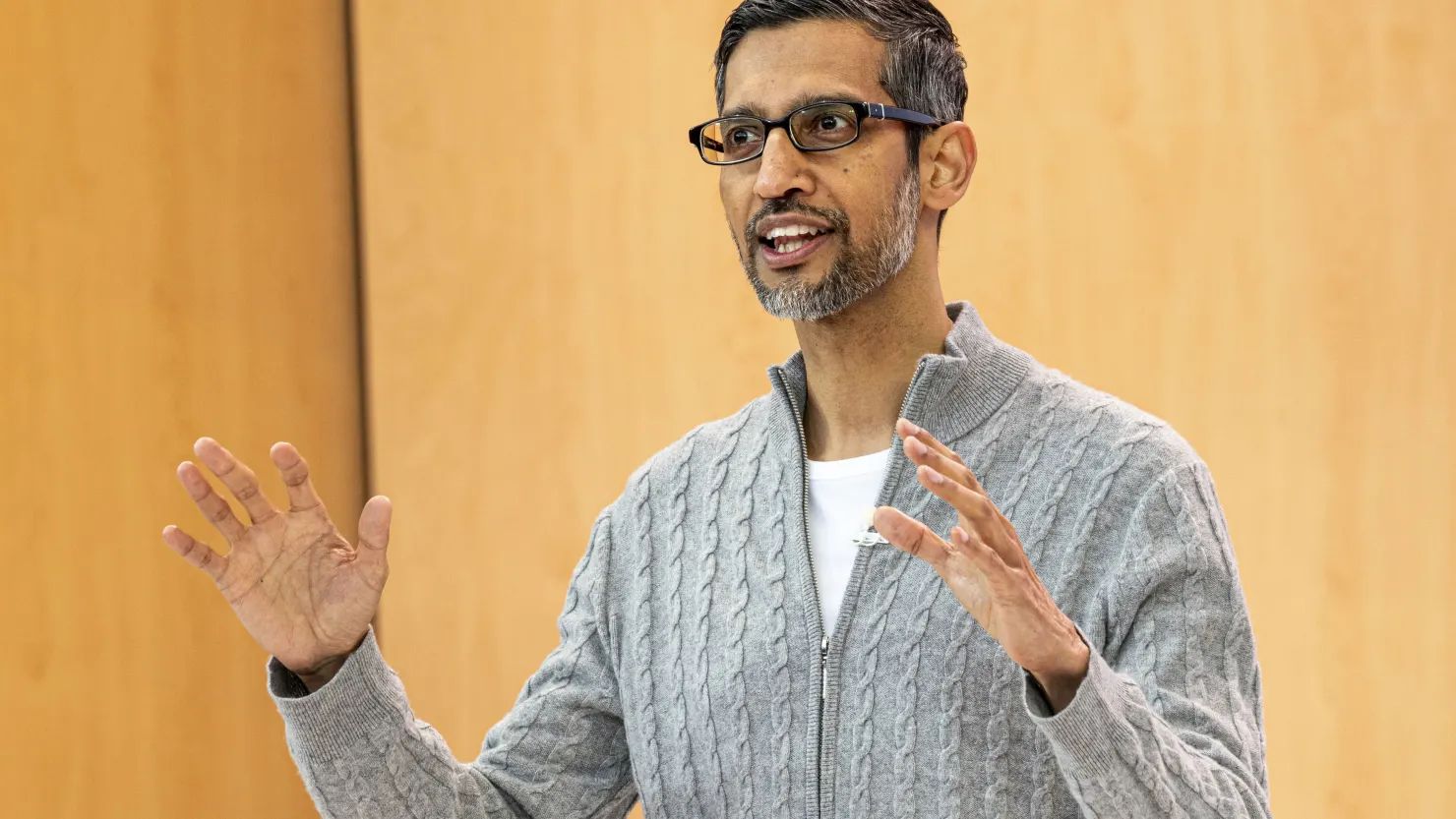

💼 Google Tells Staff: Get AI-Savvy or Get Left Behind

Image Credit: David Paul Morris / Bloomberg

At an internal all-hands, Sundar Pichai delivered a sharp message: Google's next leap won't come from headcount, but from how well employees can wield AI. With $85B earmarked for capital spending, execs say the workforce must match that scale with smarter output.

What’s unfolding inside Google:

AI fluency is now a mandate, with engineers urged to embed generative tools into daily workflows

New training programs like “Building with Gemini” and the internal site “AI Savvy Google” aim to upskill teams fast

Cider, an AI coding assistant, is already used weekly by half of early testers inside Google’s engineering ranks

Google’s $2.4B Windsurf deal, a licensing and hiring agreement rather than a full acquisition, brings in key AI talent, including co-founder and CEO Varun Mohan, to supercharge internal tools

Pichai wants leaner ops, warning teams to do more with less and to treat AI as a multiplier, not a novelty

The shift mirrors moves across Big Tech, where AI isn’t just a product but a mandate. Microsoft, Amazon, and Shopify have all told employees to embrace generative tools or risk obsolescence. For Google, this is less about trimming headcount and more about raising internal velocity.

🛰️ NASA Tests AI Crew Support for Long-Term Missions

Image Credit: NASA

A new kind of crewmate is helping astronauts on the International Space Station: a floating AI assistant named CIMON. Roughly the size of a bowling ball, CIMON is part robot, part lab partner, and part cosmic secretary. Built by Airbus and IBM, it's been designed to understand voice commands, recognize faces, and support astronauts in scientific experiments without ever needing a snack break.

Here’s what CIMON’s doing in orbit:

JAXA astronaut Takuya Onishi activated CIMON in the Kibo lab this week to test its ability to command a separate free-flying robotic camera in a search task.

The demo is part of Japan’s ICHIBAN project, which evaluates AI's potential to reduce crew workload and free up astronauts for research and recovery.

NASA engineers are studying how CIMON responds in real time, measuring how well it can process commands, interpret the environment, and guide other machines.

The goal is to train AI systems that can automate routine tasks, provide emotional support, and help crews operate independently on long-haul missions to Mars and beyond.

AI assistants like CIMON are becoming test pilots for a future where autonomy is mission-critical. NASA’s experiments are setting the groundwork for AI copilots on lunar bases and Mars habitats, where isolation, latency, and endurance will demand machines that can think, adapt, and carry weight without constant human input.
